How AI Query Fan-Out Is Reshaping SEO in 2026

Google’s index has always been larger than any human can search; now it is being searched for us, several times per human prompt, by the very models brands hope to rank in. New large-scale data (72,000+ AI-generated queries across 8,700+ prompts) show that a single question to ChatGPT or Gemini routinely triggers 8–10 parallel, hyper-specific queries before an answer is returned.

This process, known as query “fan-out”, is invisible to the end user, but it is already determining which brands, products and narratives reach tomorrow’s customers. Below, we unpack what fan-out means for enterprise search strategy and how market leaders are operationalizing coverage at scale.

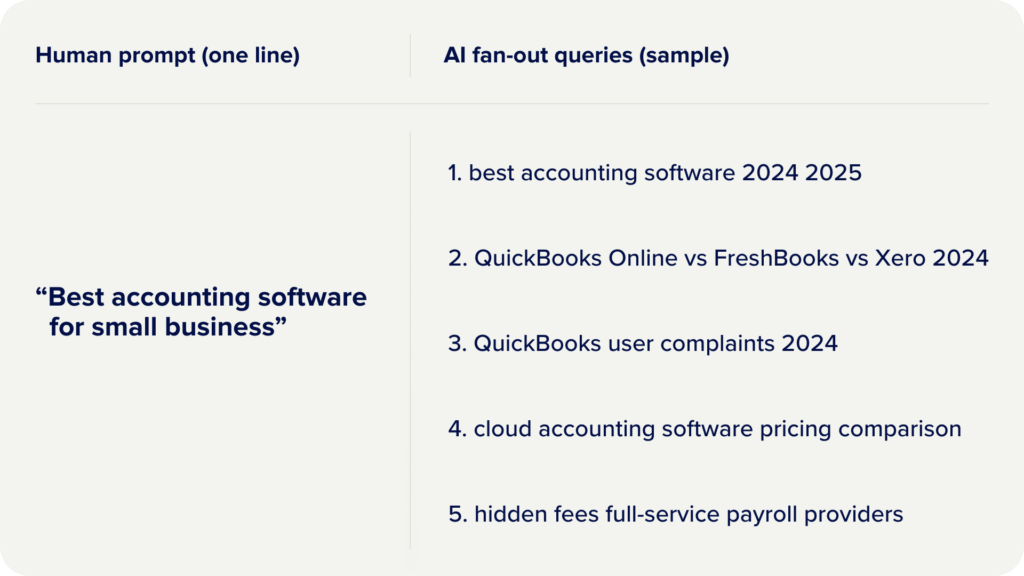

1. What an AI Query Fan-Out Looks Like in Practice

Each sub-query is executed concurrently, often with freshness, review or “vs” qualifiers that barely register in keyword tools: 95% of fan-out phrases show zero monthly search volume, yet they are the gatekeepers of generative visibility. Put simply, the AI isn’t taking anyone’s word for it. It double-checks, compares notes, and looks for recent signals before it feels comfortable answering. If your brand doesn’t show up in those checks, it doesn’t make the final cut.

2. Why AI Uses Query Fan-Out to Evaluate Results

Fan-out is not a bug; it is due diligence. LLMs expand prompts to:

- pinpoint consensus (reviews, Reddit, professional forums)

- time-stamp knowledge (“2024 2025” appears in 6% of all fan-outs)

- price-anchor options (“free”, “pricing”, “cost” in top 5-grams)

- risk-balance choices (“pros and cons”, “complaints”, “limitations”)

Only sources that survive this cross-examination surface in the final answer. From the model’s point of view, this is about confidence, not discovery. If an answer can’t be confirmed from multiple angles, it’s treated as risky and quietly filtered out.
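To make the four expansion types above concrete, here is a minimal sketch that tags fan-out queries by the qualifiers they contain. The qualifier terms come from the list above; the intent names, the grouping, and the sample queries are illustrative assumptions, not part of the underlying dataset or any model's actual logic.

```python
import re
from collections import Counter

# Qualifier terms drawn from the list above; the grouping into
# named intents is an assumption made for illustration.
QUALIFIER_TERMS = {
    "consensus": ("review", "reviews", "reddit", "forum", "forums"),
    "price": ("free", "pricing", "cost"),
    "risk": ("pros and cons", "complaints", "limitations"),
}
# Any four-digit year starting with 20 counts as a freshness signal.
YEAR_PATTERN = re.compile(r"\b20\d{2}\b")


def tag_query(query):
    """Return the set of intents whose qualifiers appear in the query."""
    text = query.lower()
    tags = set()
    for intent, terms in QUALIFIER_TERMS.items():
        if any(re.search(r"\b" + re.escape(term) + r"\b", text) for term in terms):
            tags.add(intent)
    if YEAR_PATTERN.search(text):
        tags.add("freshness")
    return tags


def intent_counts(queries):
    """Count how many queries carry each intent."""
    counts = Counter()
    for query in queries:
        counts.update(tag_query(query))
    return counts


# Hypothetical fan-out queries, not taken from the study.
sample = [
    "brand x pricing 2025",
    "brand x pros and cons",
    "brand x vs brand y reddit reviews",
]
print(intent_counts(sample))
```

Run against an exported list of fan-out queries, a tally like this gives a rough view of which validation angles a category attracts most often.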

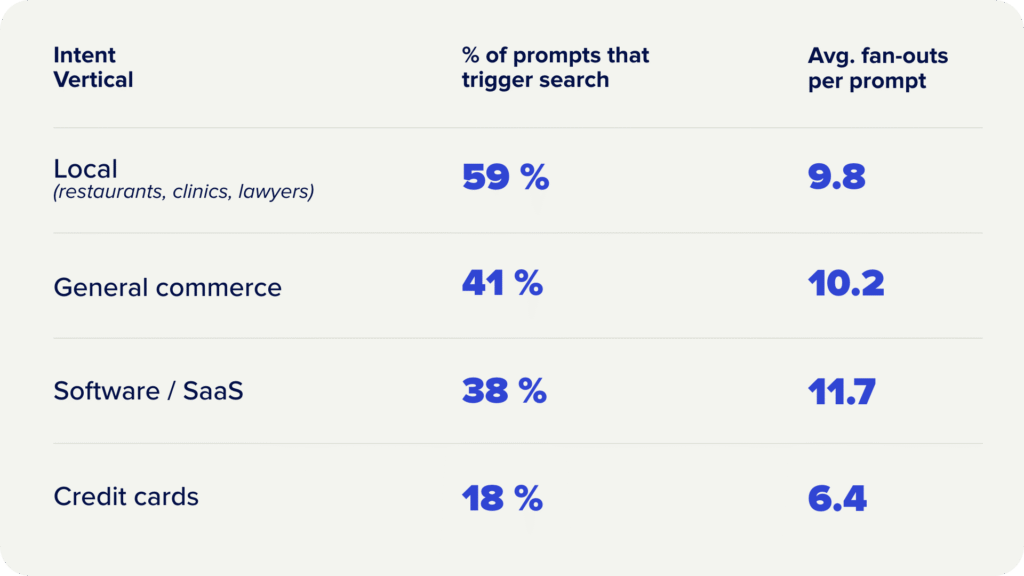

3. How Often? Fan-Out Frequency Varies by Industry

High-consideration categories see deeper interrogation; low-consideration or brand-safe sectors still face 6+ hidden queries. This mirrors how modern LLMs are trained to reduce hallucination risk by cross-checking multiple sources before responding, especially in transactional or trust-sensitive domains. As OpenAI and Google have both noted, generative systems prioritize verification depth over speed when confidence thresholds are higher.

4. The Length Paradox: Longer = More Specific

Average fan-out query length: 5.5 words (ChatGPT) and 9.1 words (Gemini) vs. ~3.4 for classic Google searches. Long-tail is no longer optional; it is the primary battlefield. In practice, this means AI isn’t guessing based on broad keywords; it’s asking smarter, more specific follow-up questions behind the scenes to make sure it gets the answer right. Brands that only optimize for short, high-volume terms simply don’t show up in those deeper conversations.
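The length comparison above is easy to reproduce on your own query lists. The sketch below computes mean word count per query; the function name and the sample queries are hypothetical, and whitespace splitting is an assumed, simplified definition of a "word".

```python
from statistics import mean


def mean_word_count(queries):
    """Average number of whitespace-separated words per query."""
    return mean(len(query.split()) for query in queries)


# Hypothetical examples: a classic head term list vs. fan-out style queries.
classic = ["crm software", "best crm tools"]
fan_out = [
    "best crm software for small business 2025",
    "brand x crm pricing hidden fees",
]

print(mean_word_count(classic))
print(mean_word_count(fan_out))
```

Comparing the two averages for your own category shows how far your tracked keyword set sits from the queries models actually issue.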

5. Freshness Signals Are Mandatory

Multi-year patterns (“2024 2025”) occur in one of every sixteen fan-outs, even for evergreen products. If your content calendar ends at the December publish date, you are already behind. AI systems assume that relevance decays faster than human readers assume it does, which means they are actively looking for information that has been revisited, revalidated, and updated. Content that hasn’t been touched recently isn’t ignored, but is treated as less trustworthy.
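One simple way to act on this is to flag pages that have not been revisited within a chosen window. The sketch below is illustrative only: the 180-day threshold, the function name, and the page paths are assumptions, and nothing in the data above specifies how quickly a model discounts older content.

```python
from datetime import date


def stale_pages(last_updated, today, max_age_days=180):
    """Return page paths whose last update is older than max_age_days.

    last_updated maps a page path to the date it was last revised.
    The 180-day default is an arbitrary assumption, not a known cutoff.
    """
    return sorted(
        path
        for path, updated in last_updated.items()
        if (today - updated).days > max_age_days
    )


# Hypothetical content inventory.
pages = {
    "/pricing": date(2025, 1, 10),
    "/reviews": date(2025, 11, 1),
}
print(stale_pages(pages, today=date(2025, 12, 1)))
```

Passing `today` explicitly keeps the check reproducible; in a scheduled audit it would typically be `date.today()`.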

6. Visibility Is Multi-Path, Not Single-Page

Traditional SEO equates ranking Page A with “winning.” In fan-out environments a brand must:

- appear in comparison URLs (“vs”, “alternatives”)

- survive complaint crawls (“problems with Brand X”)

- satisfy pricing crawls (“Brand X pricing”, “hidden fees”)

- validate review crawls (“Brand X reviews”, “G2 Brand X”)

Miss any vector and the model’s confidence score drops, along with your mention. In other words, AI doesn’t reward the best single page; it rewards brands that look consistently credible wherever it checks. Visibility is earned through repetition and validation across many platforms and content types, not by winning one keyword and hoping for the best.
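The four vectors above can be turned into a rough coverage check. This sketch scans page titles for the qualifier terms quoted in the list and reports which vectors have no matching page. The vector names, the title-matching approach, and the sample titles are assumptions for illustration; a real audit would look at the pages that rank or get cited for each fan-out query, not only at titles.

```python
import re

# Terms quoted in the list above, grouped under illustrative vector names.
VECTOR_TERMS = {
    "comparison": {"vs", "alternatives"},
    "complaints": {"problems", "complaints"},
    "pricing": {"pricing", "fees"},
    "reviews": {"reviews", "g2"},
}


def coverage_gaps(page_titles):
    """Return the vectors for which no page title contains a matching term."""
    covered = set()
    for title in page_titles:
        words = set(re.findall(r"[a-z0-9]+", title.lower()))
        for vector, terms in VECTOR_TERMS.items():
            if words & terms:
                covered.add(vector)
    return sorted(set(VECTOR_TERMS) - covered)


# Hypothetical page inventory for "Brand X".
titles = ["Brand X vs Brand Y", "Brand X pricing and plans"]
print(coverage_gaps(titles))
```

Any vector returned by `coverage_gaps` is a candidate gap: a type of validation query for which the brand currently offers the model nothing to find.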

8. Early-Mover Advantage

Because fan-out queries don’t fully show up in keyword tools, most brands aren’t actively competing for them yet. That creates a short window where publishing early really matters. Brands that show up first become the sources AI models learn from and return to over time, creating a compounding advantage. By the time these questions appear in traditional SEO tools, the models have often already formed their preferences, and late entrants are left trying to replace answers the AI already trusts.

9. Key Takeaways for Brands Competing in AI Search

- Generative answers are not won on one URL; they are won on an ecosystem of aligned pages.

- Long-tail is no longer “nice to have”; it is the bulk of AI retrieval.

- Freshness and transparency outweigh perfection: publish early, iterate often.

- PR, product marketing and support must feed SEO the same factual signals (pricing, limitations, use-cases) to survive evaluation crawls.

- Budget for continuous fan-out monitoring the way you budget for rank tracking; visibility now depends on it.

Next Steps

85SIXTY is already running fan-out query extraction for enterprise clients in ecommerce, CPG, retail, travel and hospitality. If your organic footprint is plateauing while AI referrals rise, the gap is almost certainly in the hidden query layer.

Interested? Contact us for your own fan-out coverage audit and roadmap.

85SIXTY is a data-driven digital marketing agency helping global brands own the new multi-query reality.
